Hi guys, I have a cousin who has autism and I want to make an app to help him.

But I am not familiar with App Inventor.

But what I want is something very simple:

I want an app where I can insert a category and a subcategory, with photos too, and that's all.

Can you help me, please?

--

A very good way to learn App Inventor is to read the free Inventor's Manual here in the AI2 free online eBook http://www.appinventor.org/book2 ... the links are at the bottom of the Web page. The book 'teaches' users how to program with AI2 blocks.

There is a free programming course here http://www.appinventor.org/content/CourseInABox/Intro and the aia files for the projects in the book are here: http://www.appinventor.org/bookFiles

How to do a lot of basic things with App Inventor is described here: http://www.appinventor.org/content/howDoYou/eventHandling .

Also do the tutorials http://appinventor.mit.edu/explore/ai2/tutorials.html to learn the basics of App Inventor, then try something and follow the

Top 5 Tips: How to learn App Inventor

You will not find a tutorial that does exactly what you are looking for. But doing the tutorials (not only reading a little bit) helps you to understand how things work. This is important, and it is the first step.

--

Yes, I know, and I'm not saying no, but it would take me months, maybe a year, to do.

I need something to learn from.

Also, for you a tutorial would take an hour.

Think of it this way: it would help a child with a problem.

Thanks again.

--

Yes, exactly: first you have to learn the basics, and then you can start creating your app.

Maybe one of these old posts will steer you to something already developed?

--

I found a possible email address for the nooddll app author:

Maybe you will get lucky.

--

Here I am :) (Depends on which Google Account I am logged into!)

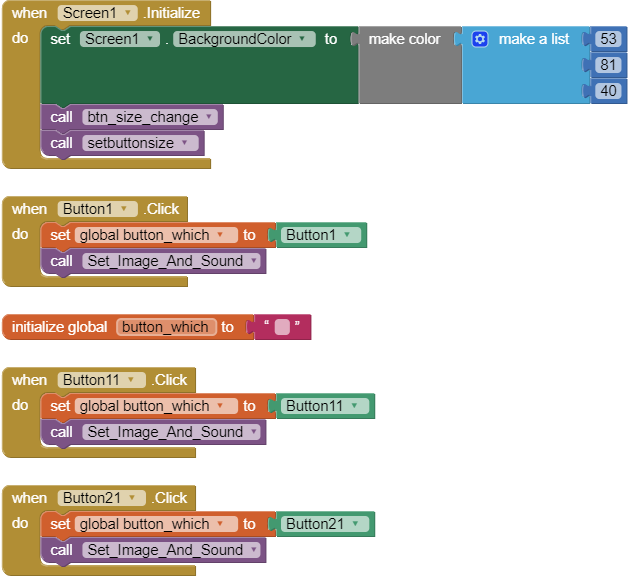

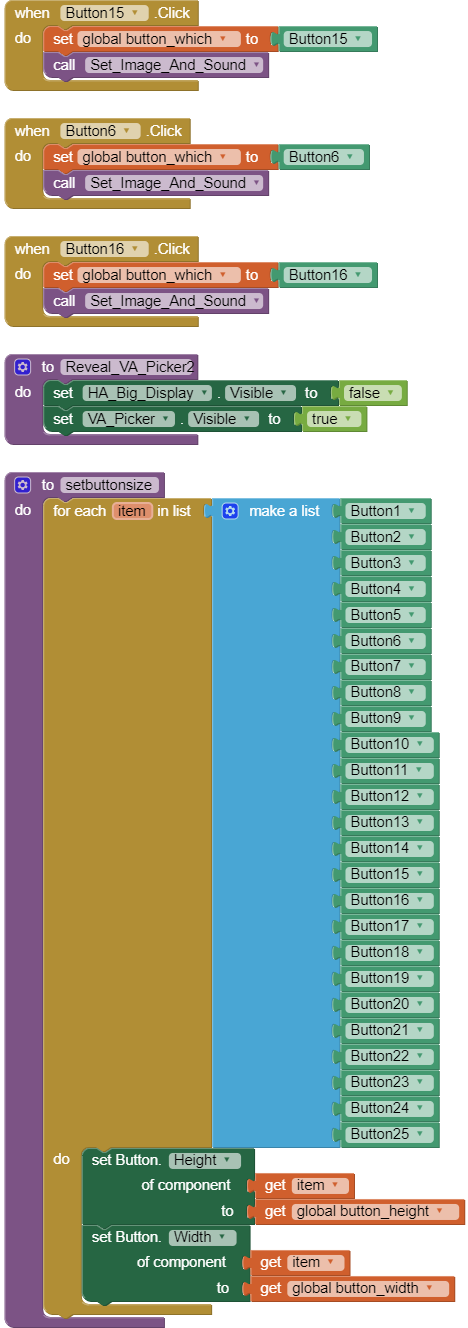

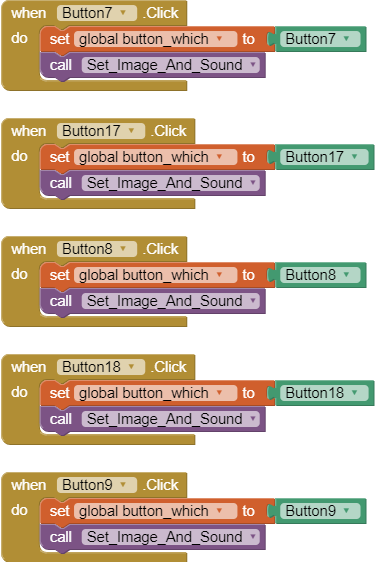

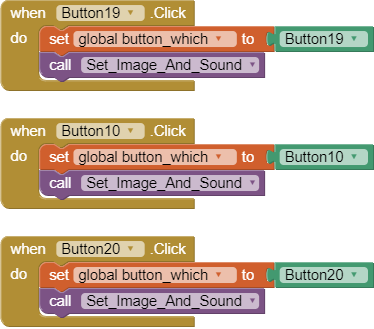

Attached is the aia of the COMS app for you.

I have spent the last four years developing simple apps for individual students with autism and severe learning difficulties, so I'm happy to help on that score.

Tim (also Tim at Loddon)

--

Oh my goodness, you are awesome! Thank you very much.

--

Here is another idea that might be useful.

Landscape mode on a Samsung Tab II Tablet

The Communication Panel could be used to help older autistic individuals communicate and could be adapted for younger users. See the URL that introduces the concept; it is also where the free image that allows selection of core ideas came from (the link is in the app).

This app intended to aid communication with special needs individuals uses a Canvas to create '88' scrollable, virtual communication buttons. The app is presented as a proof of concept that could allow developers who create apps for special needs individuals to help with ordinary tasks. The app is based on a communication board described at http://praacticalaac.org/praactical/make-it-monday-manual-communication-boards-with-core-vocabulary/ A link in the app goes there and is recommended reading.

All the code in one place.

The communications board is a modification of an app I presented on the forum several years ago called 36-Buttons. This app has 88 buttons but only a single png image. A similar app could be made with larger images and a smaller grid. Each cell is numbered starting at the top of the Canvas:

1 9 17 25 ...

2 10 18 26 ...

3 11 19 27 ...

..............88

The 'numbers' (generated by the xyToButtonNumber procedure) are used to look up entries in the word list, which is spoken by the TTS engine on your Android device.

The grid on the Canvas is 11 cells (or images) across by 8 cells down. The images are all part of a single png.
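Turning a touch point into one of those column-major cell numbers is the core of the approach. The sketch below is a hypothetical Python rendering of what a procedure like xyToButtonNumber has to do, not the actual App Inventor blocks. It assumes the 11 x 8 grid and the 600 x 391 px image size quoted in the post; the function name and parameters are illustrative.

```python
# Assumed grid from the post: 11 columns x 8 rows over a 600 x 391 px image.
COLS = 11
ROWS = 8
IMAGE_W = 600
IMAGE_H = 391


def xy_to_button_number(x, y, cols=COLS, rows=ROWS, width=IMAGE_W, height=IMAGE_H):
    """Map a touch point to a 1-based, column-major cell number.

    Numbering runs down each column first: 1..8 in the first column,
    9..16 in the second, and so on, up to 88 in the bottom-right cell.
    """
    cell_w = width / cols    # about 54.5 px
    cell_h = height / rows   # about 48.9 px
    col = int(x // cell_w)
    row = int(y // cell_h)
    # Clamp so a touch on the far right/bottom edge never yields a cell
    # number past the end of the word list.
    col = min(max(col, 0), cols - 1)
    row = min(max(row, 0), rows - 1)
    return col * rows + row + 1


print(xy_to_button_number(60, 0))  # 9: top of the second column
```

In the blocks version, x and y would come from the Canvas touch event; here they are plain numbers so the arithmetic can be checked on its own.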

It uses a fixed csv string that is converted internally to a list. The list could just as easily be built entirely from blocks or loaded with the File component. The single image (TELL_ME_core.png) was converted from a jpg to a png, because a png renders better at the different magnifications this app uses. The image is 600 x 391 pixels (102 KB).
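The csv-to-list step and the lookup by cell number can be sketched as follows. This is an illustrative Python version, not the app's blocks; the sample words and the names `load_words` and `word_for_button` are hypothetical, and the real app embeds its own 88-word string.

```python
import csv

# Hypothetical sample; the real app embeds its own 88-word csv string.
WORDS_CSV = "I, you, want, go, stop, more, help, eat"


def load_words(csv_text):
    """Split one csv line into a list of trimmed words."""
    row = next(csv.reader([csv_text]))
    return [word.strip() for word in row]


def word_for_button(words, number):
    """Return the word for a 1-based button number, or None if out of range."""
    if 1 <= number <= len(words):
        return words[number - 1]
    return None


words = load_words(WORDS_CSV)
print(word_for_button(words, 3))  # want
```

The range check mirrors the idea of never indexing past the end of the list; App Inventor lists are also 1-based, which is why the sketch subtracts 1 only at the Python indexing step.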

It uses Text to Speech (TTS), with the speech rate slowed from the default of 1 to 0.8 (see the Designer screen). Several words in the word list are deliberately misspelled so that the speech engine pronounces them properly; for instance, read is changed to reed because the engine says 'red'.
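The post stores the misspelled forms directly in the word list. A possible variation, sketched here in Python as an assumption rather than the app's method, keeps the correct spelling for display and swaps in a pronunciation-friendly spelling only when the text is handed to the speech engine. The table and function name are hypothetical.

```python
# Hypothetical respelling table: word shown to the user -> spelling sent to TTS.
TTS_RESPELLINGS = {
    "read": "reed",  # the engine otherwise says "red", per the post
}


def tts_text(word, respellings=TTS_RESPELLINGS):
    """Return the spelling to pass to the speech engine for a displayed word."""
    return respellings.get(word.lower(), word)


print(tts_text("read"))  # reed
print(tts_text("go"))    # go
```

Keeping the two spellings apart means a label drawn on screen can still show "read" while the speaker says it correctly.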

Components are named with their default names. Screen orientation is Landscape and Sizing is Fixed. Developers should experiment with Responsive sizing to achieve their individual goals. The app is intended to run on a tablet; it was tested on the emulator and a Samsung Tab II. It will work on most phones, although the images become too small for all but little fingers. Values in the xyToButtonNumber procedure may have to be adjusted for the screen densities (dpi) of some devices. The values in the procedure must also change if the grid has a number of cells other than 88 or dimensions other than those in the example. The number 8 shown in the example refers to the number of ROWS.

The app is not a finalized tool toward building a communications board for special needs individuals. The app contains several features that aid in the design of the app and are useful for developers. When you build your similar app, you will probably want to remove those features and use a different background. Getting and using the background images wisely is the most difficult part of this project. Be careful to avoid copyrighted images. If you have good graphical skills, you will probably spend most of your time designing the image grid.

Attached is an aia file that has the image and the embedded csv. The aia is 467 KB and will build to an apk of about 1.9 MB.

I hope this example will prove useful to developers working with special needs people.

--

Thank you very much for your interest, SteveJG. For a start I will stay with nooddl, because I'm not good at programming and it seems easier for me.

--

Following your autism2 and 36-Buttons method, how do you determine the values you divide by in the xyToButtonNumber procedure?

Is there a logical way of doing it, or is it "knowledgeable" trial and error? They do not seem to relate directly to the pixel width and height of the background image.

I found, with the example you gave, I got better edge to edge results in my Nexus 7 emulator using 54.5 and 49 respectively. That said the accuracy of a mouse pointer is far higher than a pudgy finger!

I also noticed that Scott Ferguson used a "floor" on the final result in the procedure, does this help?

[EDIT]

Having just looked at this again, I can see that with the image at 600x391 and with 88 cells, 11 across by 8 down, 600/11 ≈ 54.5 and 391/8 ≈ 48.9, so this all makes sense, unless you have further to add :)

--

You essentially got it (600x391 with 88 cells, 11 across by 8 down: 600/11 ≈ 54.5 and 391/8 ≈ 48.9). What goes in the equation depends on the image dimensions (grid) and the number of columns across and rows down.
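Written out as a worked equation, with W × H the image size in pixels, C columns, R rows and a touch at (x, y): these symbols are introduced here for illustration, and the floors assume the column-major numbering shown earlier in the thread.

$$
w = \frac{W}{C} = \frac{600}{11} \approx 54.5, \qquad h = \frac{H}{R} = \frac{391}{8} \approx 48.9
$$

$$
n = R\left\lfloor \frac{x}{w} \right\rfloor + \left\lfloor \frac{y}{h} \right\rfloor + 1, \qquad 1 \le n \le C R = 88
$$

The second line only stays inside 1..88 if both floored terms are kept below C and R respectively, which is what a guard on the list length has to enforce at the right and bottom edges.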

Scott recommended using a floor (essentially a truncation), which worked with smaller image dimensions but is totally inappropriate with larger images and device screens. Go ahead and use trunc... you will find the grid behaves with the leftmost columns but not as you progress to the right. The if statement that ensures the length of the list is never exceeded, as one proceeds to the right and lower cells of the grid, is there to prevent the procedure from 'overestimating' the grid cell when it gets to the final column. I haven't tried your 54.5 and 48 versus 52.6 and 50; yours might be better values. Perhaps I will look later tomorrow. If you ever have issues, you might try preceding the procedure code with a round, trunc or ceil block. Using one or the other might be helpful.
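One plausible reason a grid can behave on the left but not on the right (an assumption, not a diagnosis confirmed in the thread) is a divisor that no longer matches the width the Canvas is actually drawn at: the error grows with every column, and the last column overflows the list. The Python sketch below uses a hypothetical 660 px rendered width to show the drift, the effect of an if-style clamp, and a divisor derived from the real width; the function names are illustrative.

```python
import math

COLS = 11
TUNED_DIVISOR = 600 / COLS  # ~54.5, tuned for a 600 px wide image


def column_for(x, divisor, cols=COLS, clamp=True):
    """0-based column for a touch at x; optionally clamp to the last column."""
    col = math.floor(x / divisor)
    return min(col, cols - 1) if clamp else col


def columns_at_centres(rendered_w, divisor, clamp=False):
    """Computed column for the centre of each true column on a canvas rendered_w px wide."""
    true_w = rendered_w / COLS
    return [column_for(k * true_w + true_w / 2, divisor, clamp=clamp) for k in range(COLS)]


# Hypothetical: the canvas is actually drawn 660 px wide on some device.
print(columns_at_centres(660, TUNED_DIVISOR))              # [0, 1, 2, 3, 4, 6, 7, 8, 9, 10, 11]
print(columns_at_centres(660, TUNED_DIVISOR, clamp=True))  # [0, 1, 2, 3, 4, 6, 7, 8, 9, 10, 10]
print(columns_at_centres(660, 660 / COLS))                 # [0, 1, 2, 3, 4, 5, 6, 7, 8, 9, 10]
```

The clamp only stops the overflow; it does not fix the misassigned middle columns, which is why computing the divisor from the Canvas's real width (when it is known) may be the more robust choice.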

When I first worked with Scott's algorithm, AI had some issues with scaling (well, it still does), but MIT has fixed some of them, so the algorithm using fixed screen sizing works better than it did at that time.

Another way of doing the Communications Panel or Board would be to use fixed sprites without images on the Canvas. I am not sure what 88 sprites would do; it would probably crater the compiler.

--

Thanks SteveJG, all makes sense.

To help the OP, I was working (in my mind, so far) on using the 36-Buttons approach to replicate my simple COMMS Board app, and this indeed got me thinking about using fixed image sprites. I would only have about 10 per canvas, as each image must be big enough for easy viewing/recognition on a tablet. I am also thinking about zooming an image sprite up to fill the canvas, or zooming the background image. Plenty of options. Will have a play :)

--

Here is a quick (and unfinished) example using the Canvas approach, with individual image sprites set on top of a grid. I truncate the filename of the image (which may need to be pseudo-phonetic) to get the text for the TTS.
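Truncating the filename to get the spoken word amounts to dropping the folder and the extension. A minimal Python sketch, assuming filenames like `reed.png`; the function name is hypothetical, and turning underscores into spaces is an extra assumption for multi-word names.

```python
import os


def word_from_filename(path):
    """Drop the folder and extension so the file name can be spoken by TTS.

    Replacing underscores with spaces is an extra assumption, handy for
    multi-word names such as ice_cream.png.
    """
    stem, _ext = os.path.splitext(os.path.basename(path))
    return stem.replace("_", " ")


print(word_from_filename("reed.png"))  # reed
```

Because the spoken text comes straight from the filename, a pseudo-phonetic name such as reed.png is what makes the pronunciation come out right.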

Screens:

--

If you are going to use that much screen real estate and limit yourself to ten images, why not place an Image component to the right of the Canvas? It is probably easier to show the enlarged image there.

Use a generic procedure, triggered from the sprite's Touched event, to show the larger image.

Nice example, and essentially what I suggested. Something could also be done by combining all your images into a single image; that is easy to do in Paint by cutting and pasting. Then use the 'larger' image in the Image component or in a second Canvas. The image could be zoomed by unchecking the 'use actual size' box (or whatever it is called), providing a png image, and using a slider to vary the height/width of the displayed image. The Canvas would be more involved and require more code.

Enjoy your experimenting. We are certainly developing a stable of methods developers could use to work with images and select them. :)

--

Yes, I might have a go at a single image with many images, and zoom that up to just show one.
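Zooming a combined image up to show just one picture needs two numbers: where that picture sits in the combined image and how much to scale it. The Python sketch below assumes the pictures are laid out in the same column-major grid used earlier in the thread; `cell_rect` and `zoom_to_fit` are hypothetical helpers, not App Inventor blocks.

```python
def cell_rect(n, cols, rows, sheet_w, sheet_h):
    """(left, top, width, height) in pixels of 1-based cell n, numbered column by column."""
    if not 1 <= n <= cols * rows:
        raise ValueError("cell number out of range")
    col, row = divmod(n - 1, rows)
    w = sheet_w / cols
    h = sheet_h / rows
    return (col * w, row * h, w, h)


def zoom_to_fit(cell_w, cell_h, view_w, view_h):
    """Largest uniform scale that fits one cell inside the view without distortion."""
    return min(view_w / cell_w, view_h / cell_h)


left, top, w, h = cell_rect(9, 11, 8, 600, 391)  # top of the second column
scale = zoom_to_fit(w, h, 800, 480)               # hypothetical 800 x 480 view
```

Using the smaller of the two ratios keeps the picture's proportions, which matters if the goal is one clear, undistorted image on screen.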

--

Went for the low hanging fruit though :)

Much is guided by the world of autism (especially the area where I work, the deep end!): the need to minimise distractions, hence hiding everything else and having just one image on screen.

Some apps I have done just show one image; the student picks this one image simply to demonstrate a choice, and that is it! (The teacher/carer would then change the image for another choice to be made later on.)

--

The discussion about the best board set-up is very interesting to me and I want to chime in. I have been working for quite a while on an app that can handle Blissymbols (https://en.wikipedia.org/wiki/Blissymbols), a visual language used by a shrinking group of people, mainly in Sweden, Canada and Belgium. I have seen it used by a group of people in Belgium who use wheelchairs; some have severe intellectual disabilities, and some can only communicate by moving their head. Most cannot speak. They have large touchscreen PCs in front of them that display these Blissymbols and speak when touched. Most know a few hundred words, and I saw some wonders of communication with the help of family and speech therapists.

I wanted a setup that would allow me to handle any number of Blissymbols (there are about 6000) and organise them for individual users. I also needed several languages to be supported, primarily Swedish, English and Dutch. My current version can switch between 15 languages.

My reason for entering this discussion is the setup of my board: the tablet version has 40 canvases with 40 sprites on them in a table arrangement. It is hell to set up, but once done, the canvases and the sprites are easily addressed. Unfortunately, I do not have a version right now that I can easily share.

For a new version that I am building, I think I actually do not need the sprites, but I do need the canvases, because you can write text on them (words in the chosen language, in my case). Also, the background colour may vary depending on the country where the board is used and the type of word. The pictures can be the background image of each canvas.

The attached picture shows the main board with two sets of symbols. The arrows or the list picker can be used to go to a different set. I had about 200 words loaded at the time. The pictures are preloaded (and are not assets in AI), but take very little space as transparent .png images.
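Moving between sets with the arrows is essentially paging a long symbol list into board-sized chunks. A minimal Python sketch, assuming 40 symbols per board (one per canvas) and that the arrows wrap around at either end; both the wrap-around and the function names are hypothetical design choices, not the author's implementation.

```python
def page_count(n_items, per_page=40):
    """Number of boards needed; at least one, even for an empty list."""
    return max(1, -(-n_items // per_page))  # ceiling division


def page_items(items, page, per_page=40):
    """Symbols for a 0-based board; the page number wraps so arrows can cycle."""
    page %= page_count(len(items), per_page)
    start = page * per_page
    return items[start:start + per_page]


symbols = [f"symbol{i}" for i in range(200)]  # hypothetical: about 200 words loaded
board = page_items(symbols, 1)                # second set of 40
```

A list picker can jump straight to a page index, while the arrows just add or subtract 1 and let the modulo handle the ends.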

--

You have hit the nail on the head in your first sentence: "the best board set-up"

The best board setup is the one that works best for the individual. So in the right setting, you use the right board.

This is the reason my Head Teacher targeted me (given I had let on I knew "something" about IT) and said: "I have seen all the AAC software available, but none of it is suitable for our students; make me something like this!" (hands me a drawing). The autism spectrum is so wide and varied, and even more so when extended beyond autism into learning difficulties or physical disabilities, that no single tool will suit all users. This, I guess, is good for developers, but it does raise the question of finding the right base or model on which to build for multiple scenarios: buttons or canvas, simple no-distraction stuff, or animated and visually exciting.

What is great is seeing the results, and how it helps our young people communicate - even at a very basic level and get their needs met.

Could go on for hours :)
